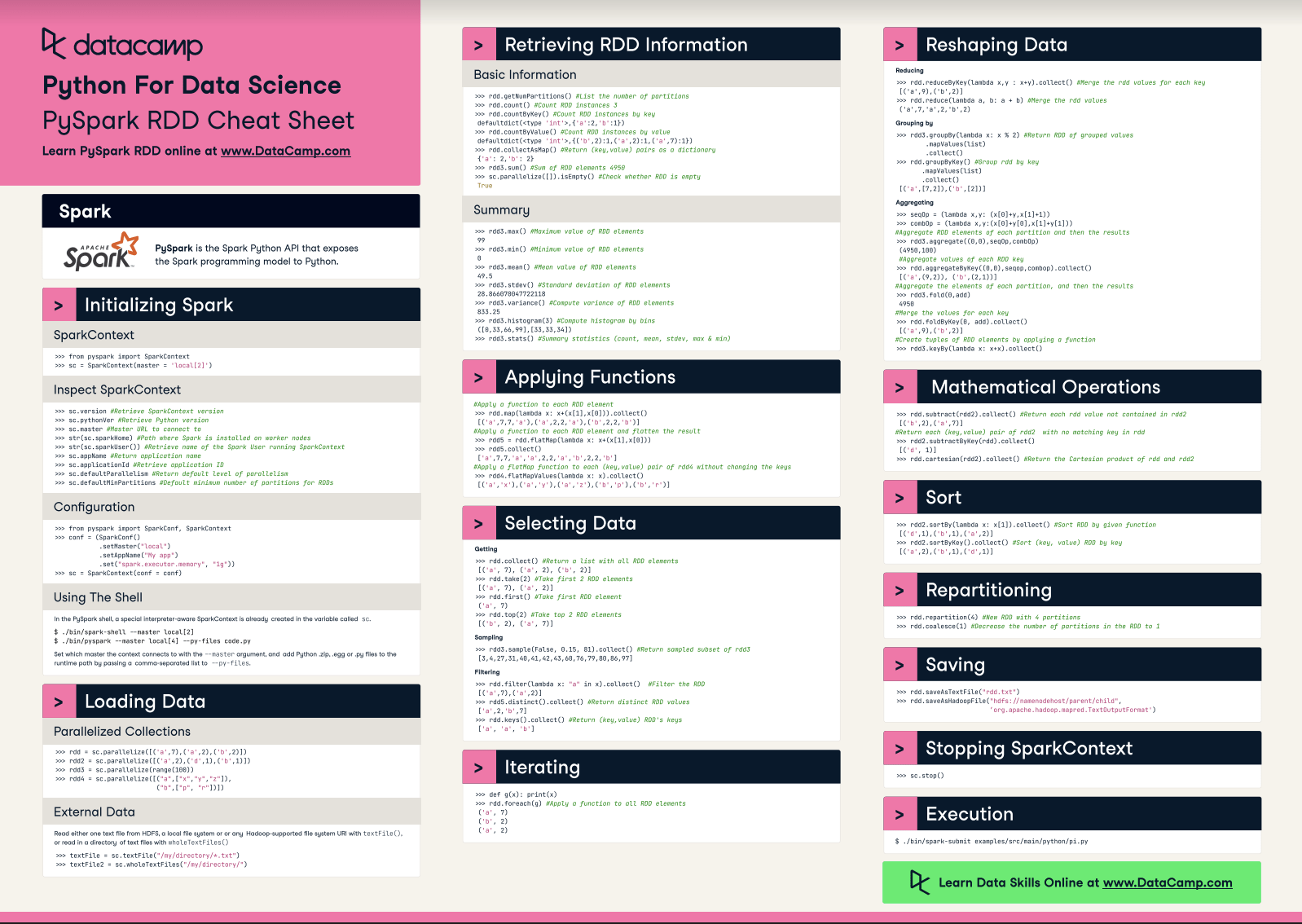

Introduction to PySpark: Learn from Datacamp

PySpark is the Python API for Apache Spark and is widely used for large-scale data analysis. Datacamp offers courses that take you from the basics of PySpark to more advanced topics. In this blog post, we will introduce you to PySpark and show you some of the ways it can be used for data analysis.

What is PySpark?

Python is a widely used high-level programming language that enables you to develop software more quickly and with less code. PySpark is the Python API for Apache Spark, an open-source engine for distributed data processing, and it lets you work with large datasets using ordinary Python code.

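PySpark can read data from and write data to a variety of storage systems, including HDFS, Amazon S3, and Hive tables, in formats such as CSV, JSON, and Parquet; other systems, such as HBase, can be reached through separate connectors. Graph processing is available through the separate GraphFrames package, and Spark's machine learning library, MLlib, includes regression models that can be used to predict future values. Because PySpark is the Python interface to Apache Spark, your code runs on Spark's distributed engine, which can speed up analytics workflows on large datasets.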

In this article, we’ll introduce you to PySpark and show you some simple examples of how to use it. We’ll also provide some tips for getting started and advice on how to get the most out of PySpark.

What are the key features of PySpark?

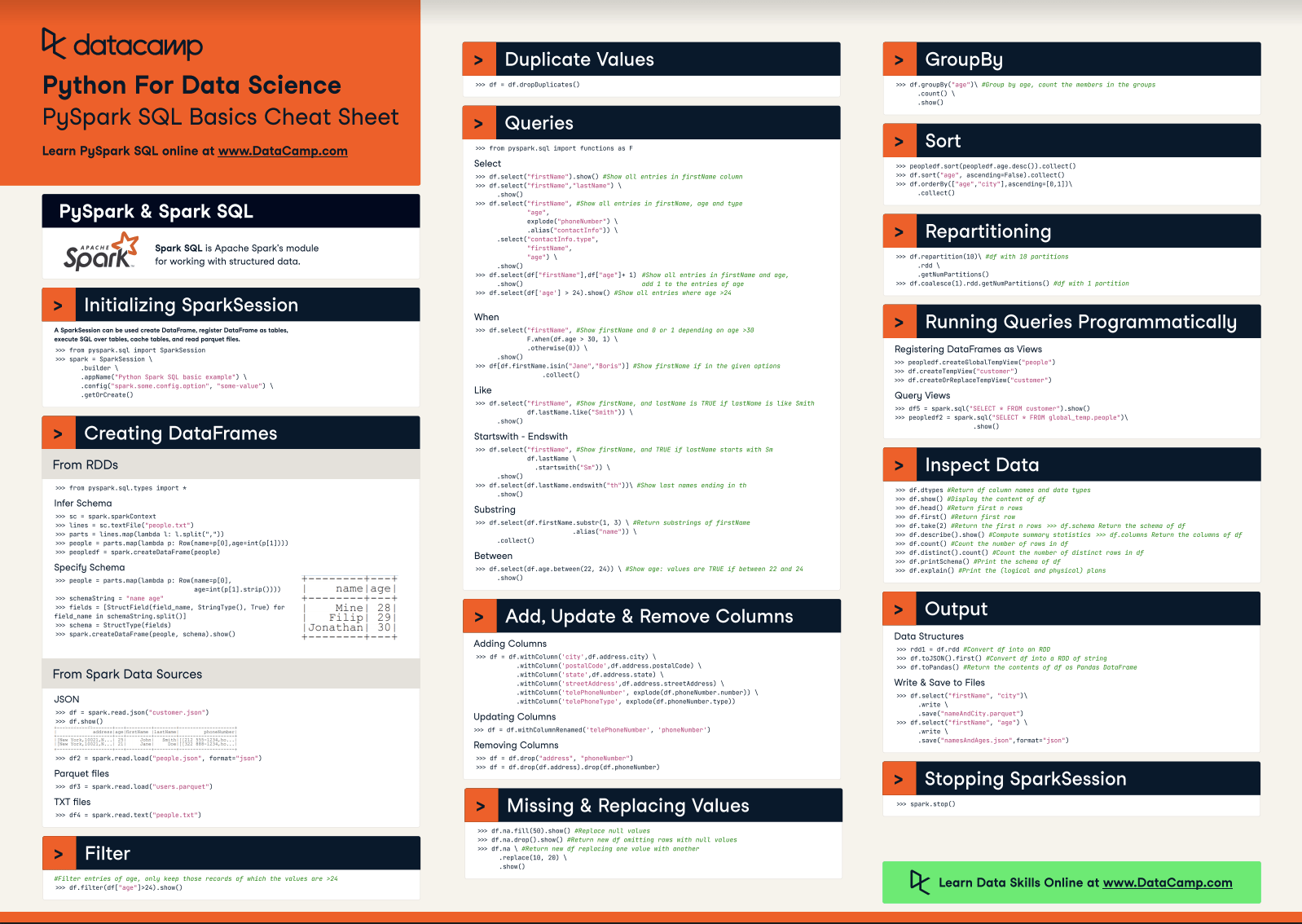

PySpark brings Spark's tools for data processing, data exploration, and machine learning to Python. It is designed to make it easy to work with datasets that are too large to handle comfortably on a single machine.

PySpark can process large datasets quickly because Spark splits data into partitions and runs tasks on them in parallel, across the cores of a single machine or across the nodes of a cluster. PySpark also includes Spark SQL, which lets you query your data using familiar SQL syntax, and Structured Streaming, which applies the same DataFrame operations to data that arrives continuously.

PySpark also includes MLlib, Spark's machine learning library, which provides algorithms for classification, regression, clustering, and recommendation, along with feature transformers and model evaluation tools. MLlib does not bundle deep learning frameworks such as TensorFlow or PyTorch, but PySpark can be used to prepare and transform large datasets before training models in those frameworks.

Overall, PySpark makes it easy to analyze large datasets quickly and build custom models using machine learning techniques.

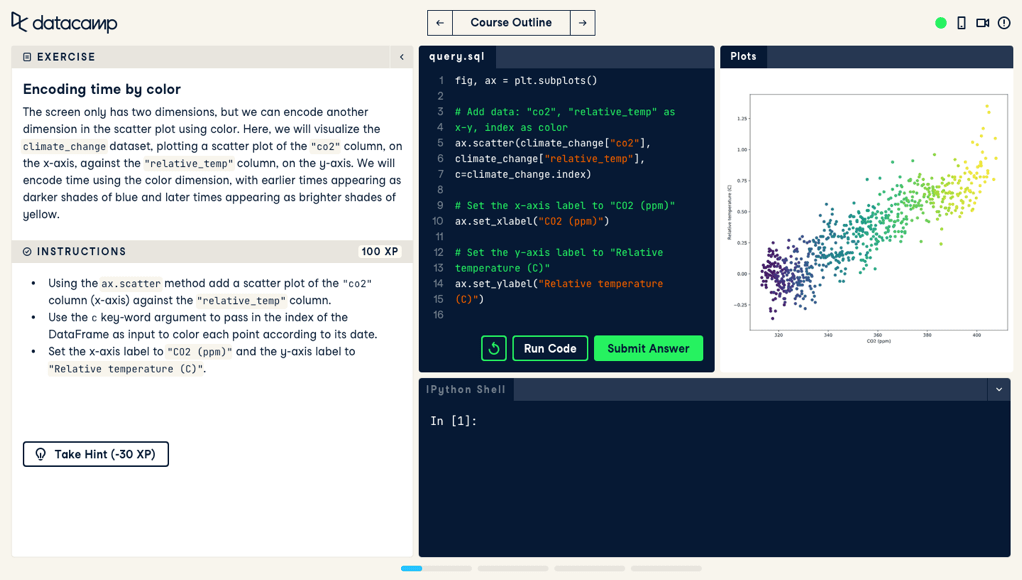

How can PySpark be used in data science projects?

PySpark provides a comprehensive platform for data analysis and machine learning. It can be used for tasks such as data loading, feature engineering, clustering, classification, and regression. It also includes feature transformers for text processing, such as `Tokenizer`, `HashingTF`, and `IDF`, and window functions that are useful for time-series analysis.

One of the key benefits of using PySpark is that its DataFrame API offers a high level of abstraction over the underlying Spark engine, which makes it easy to write code that can be reused across different datasets and applications. PySpark also supports data preprocessing and ML Pipelines, which chain transformation and modeling steps into a single reusable workflow. This makes it well suited to complex data analysis tasks such as data cleaning and transformation.

Overall, PySpark is a powerful tool that can be used to develop advanced data science projects. It offers a robust platform that facilitates the implementation of complex algorithms and can handle large amounts of data easily.

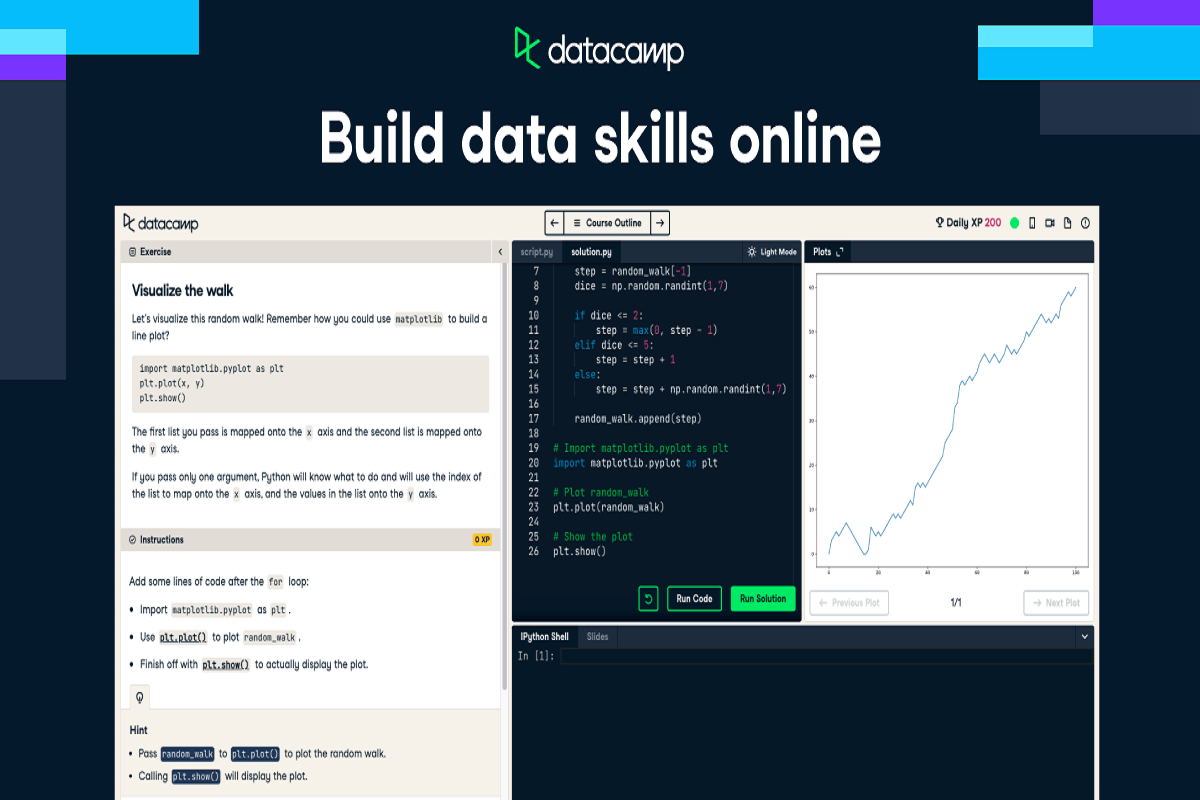

Conclusion

Datacamp is a well-known platform that provides online courses on data science, machine learning, and big data. Its PySpark course is one of its most popular offerings, and it covers a wide range of Spark topics. If you want to learn about Apache Spark and its features, the course offers a comprehensive introduction for beginners, so be sure to check it out if you are interested in learning more about this powerful tool.